The Evolution of Attention

A reflection on how attention evolved from an RNN add-on to the foundation of modern AI—and what happened after “Attention Is All You Need” changed everything.

Self-Attention and Cross-Attention

It’s useful to refine the notions of attention here. The Transformer uses both cross-attention and self-attention, and they serve different purposes.

Each layer in the encoder does self-attention to evolve the encoded sentence meaning. The tokens in the input sentence look at each other, building increasingly rich representations of what the sentence means.

The decoder does the same over the output tokens it has produced so far. But after each self-attention round, the decoder also does cross-attention on the encoder's final output: the queries come from the decoder, the keys and values from the encoder. This lets the decoder keep the encoded sentence fresh in mind, constantly referencing the original input while generating the output.
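To make the distinction concrete, here is a minimal NumPy sketch. It assumes a single head, random untrained weights, and one shared set of projection matrices for both calls (a real Transformer learns separate weights for each attention block). The helper names `softmax` and `attention` are made up for illustration. The only difference between the two modes is where the keys and values come from.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(queries_from, keys_values_from, Wq, Wk, Wv):
    """Single-head scaled dot-product attention (illustrative helper)."""
    Q = queries_from @ Wq
    K = keys_values_from @ Wk
    V = keys_values_from @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
d_model = 8
# Hypothetical random projections; shared here only to keep the sketch short.
Wq, Wk, Wv = (rng.normal(size=(d_model, d_model)) for _ in range(3))

source = rng.normal(size=(5, d_model))  # 5 input (encoder-side) tokens
target = rng.normal(size=(3, d_model))  # 3 output (decoder-side) tokens

# Self-attention: queries, keys and values all come from the same sequence.
self_out, self_w = attention(source, source, Wq, Wk, Wv)

# Cross-attention: queries from the decoder, keys and values from the encoder.
cross_out, cross_w = attention(target, source, Wq, Wk, Wv)

print(self_w.shape)   # one row per source token, one column per source token
print(cross_w.shape)  # one row per target token, one column per source token
```

The weight matrix is square for self-attention and rectangular for cross-attention, which is the whole story in one shape.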

The Depth of the Architecture

And everything is deep now.

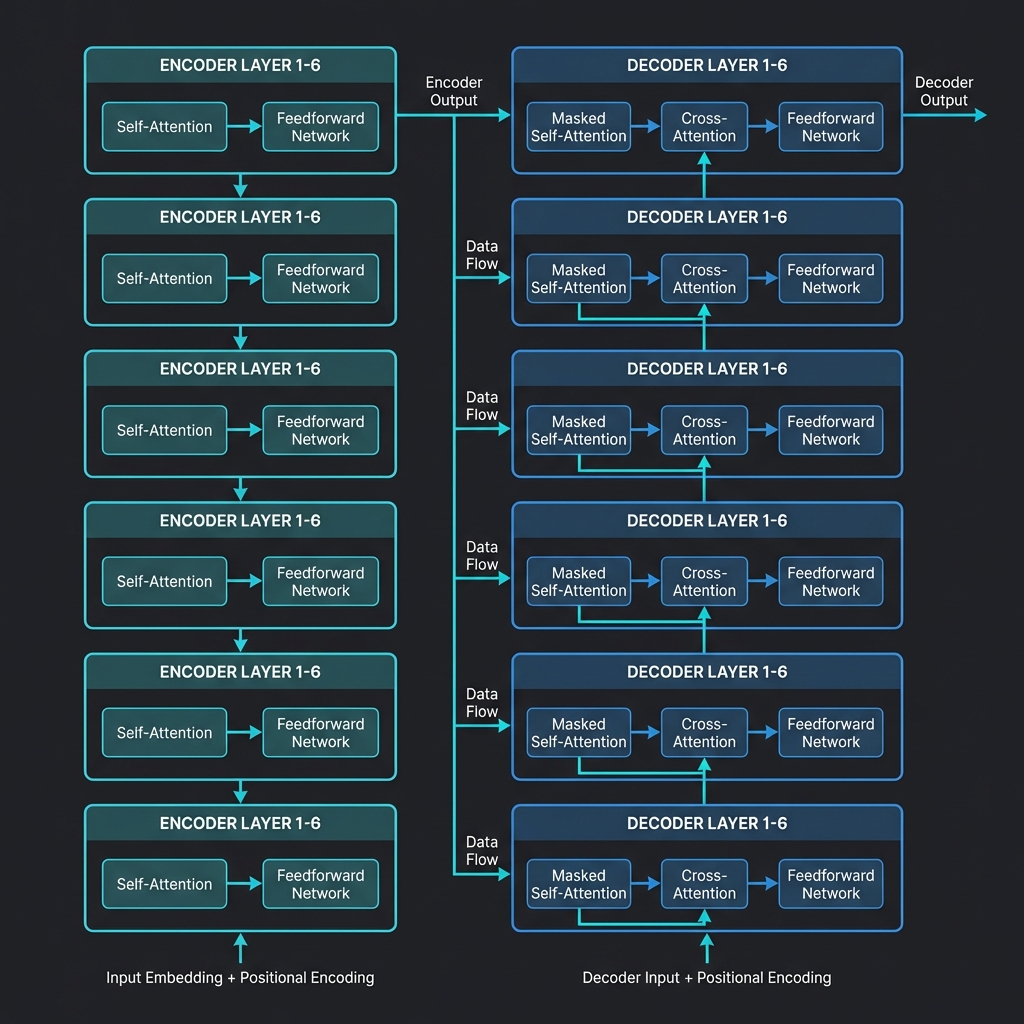

We have 6 layers of encoder, where each layer is: self-attention → feedforward network.

And 6 layers of decoder, where each layer is: masked self-attention → cross-attention to encoder → feedforward network.
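The "masked" part of the decoder's self-attention means that each position may only look at itself and earlier positions, so the model cannot peek at tokens it has not generated yet. A rough sketch of that mask, assuming a single head and random stand-in queries and keys (the function name `causal_self_attention_weights` is hypothetical):

```python
import numpy as np

def causal_self_attention_weights(Q, K):
    """Attention weights where position i can only see positions <= i."""
    n = Q.shape[0]
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    # True strictly above the diagonal, i.e. for "future" positions.
    future = np.triu(np.ones((n, n), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    # Softmax per row; exp(-inf) is exactly 0, so masked entries vanish.
    scores = scores - scores.max(axis=-1, keepdims=True)
    e = np.exp(scores)
    return e / e.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
Q = rng.normal(size=(4, 8))  # stand-in queries for 4 decoder positions
K = rng.normal(size=(4, 8))  # stand-in keys for the same 4 positions

weights = causal_self_attention_weights(Q, K)
print(np.round(weights, 2))  # lower-triangular: zeros above the diagonal
```

The first row puts all of its weight on the first token, since there is nothing earlier to attend to.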

There were a lot of things that had to come together. It’s a remarkable paper.

The Design Philosophy

The main point is that all of these design requirements came from trying to get rid of the recurrent network and run all of this stuff in parallel.

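And no small part of the trouble came from the way that attention is blind to order. Shuffle the input tokens and the outputs are simply shuffled the same way (strictly speaking, self-attention is permutation equivariant), so it has no idea about sequence ordering. That's why positional encodings were necessary: they add position information to each token embedding before the first layer.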
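A small experiment shows both halves of this. The sketch below assumes identity projections (no learned weights) to keep the attention function short, and uses the sinusoidal encoding from the paper with an even `d_model`. The function names are made up for illustration.

```python
import numpy as np

def self_attention(X):
    """Single-head self-attention with identity projections (a simplification)."""
    scores = X @ X.T / np.sqrt(X.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    w /= w.sum(axis=-1, keepdims=True)
    return w @ X

def sinusoidal_positions(n_positions, d_model):
    """Sinusoidal positional encoding; assumes d_model is even."""
    positions = np.arange(n_positions)[:, None]   # shape (n, 1)
    dims = np.arange(0, d_model, 2)[None, :]      # even indices 2i
    angles = positions / (10000 ** (dims / d_model))
    pe = np.zeros((n_positions, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions
    pe[:, 1::2] = np.cos(angles)  # odd dimensions
    return pe

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 6))       # 4 tokens, d_model = 6
perm = np.array([2, 0, 3, 1])     # an arbitrary reordering of the tokens
pe = sinusoidal_positions(4, 6)

# Without positions: shuffling the input just shuffles the output rows.
order_blind = np.allclose(self_attention(X[perm]), self_attention(X)[perm])

# With positions added, the same shuffle now changes the result.
order_aware = not np.allclose(
    self_attention(X[perm] + pe), self_attention(X + pe)[perm]
)

print(order_blind, order_aware)
```

Adding the encoding ties each token to its slot in the sequence, so the same token in a different place produces a different vector.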

And this worked. On the WMT 2014 English-to-German and English-to-French translation benchmarks, Google had done it. They had shown you didn't actually need a recurrent network. They had genuinely shown that attention is all you need.

It’s a well-named paper.

The Core Mathematics Persists

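The rest of this is mostly an epilogue. “Attention Is All You Need” really nailed down the form of attention as it persists today. There have been performance improvements, but the core mathematics is the same: scaled dot-product attention, where a softmax over query-key similarities decides how much of each value to mix in.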
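For reference, this is the scaled dot-product attention from the paper, together with its multi-head form. Here $Q$, $K$ and $V$ are the query, key and value matrices, $d_k$ is the key dimension, and the $W$ matrices are learned projections.

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
$$

$$
\mathrm{head}_i = \mathrm{Attention}\!\left(Q W_i^{Q},\; K W_i^{K},\; V W_i^{V}\right),
\qquad
\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^{O}
$$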

Splitting the Transformer

Of course, other things have changed. Dramatically.

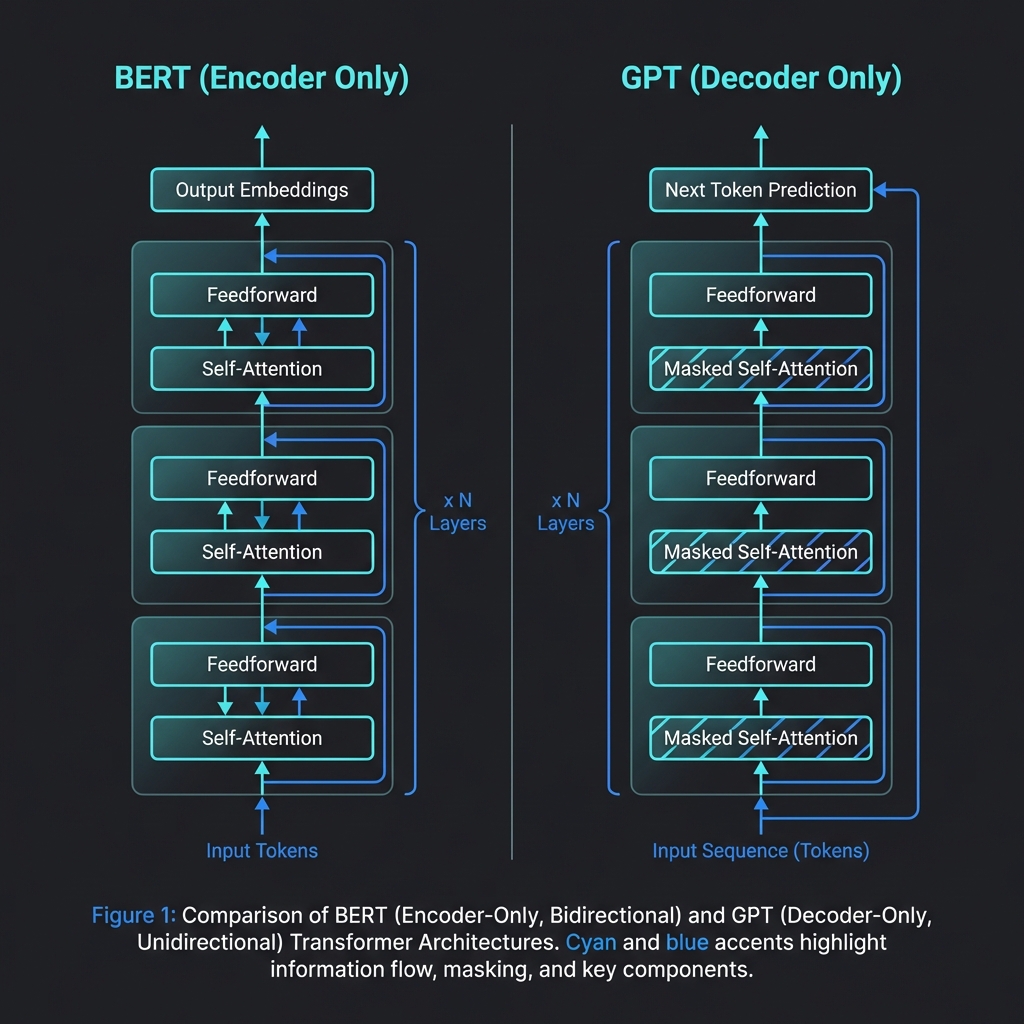

The original Transformer was designed for translation as an encoder/decoder network. Subsequent work started splitting up the Transformer to study the pieces in isolation.

BERT: The Encoder Path

BERT took the top half of the Transformer (the encoder only) and used it to produce rich, contextualized representations of text: the sort of embeddings you could use for sentiment analysis or vector databases.

GPT: The Decoder Path

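Meanwhile, OpenAI took the bottom half. They grabbed the decoder from the Transformer, dropped the cross-attention (there was no encoder left to attend to), and trained it as a generative model that predicts the next token.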

This move ended up being of some consequence over the following years.

Deeper Understanding

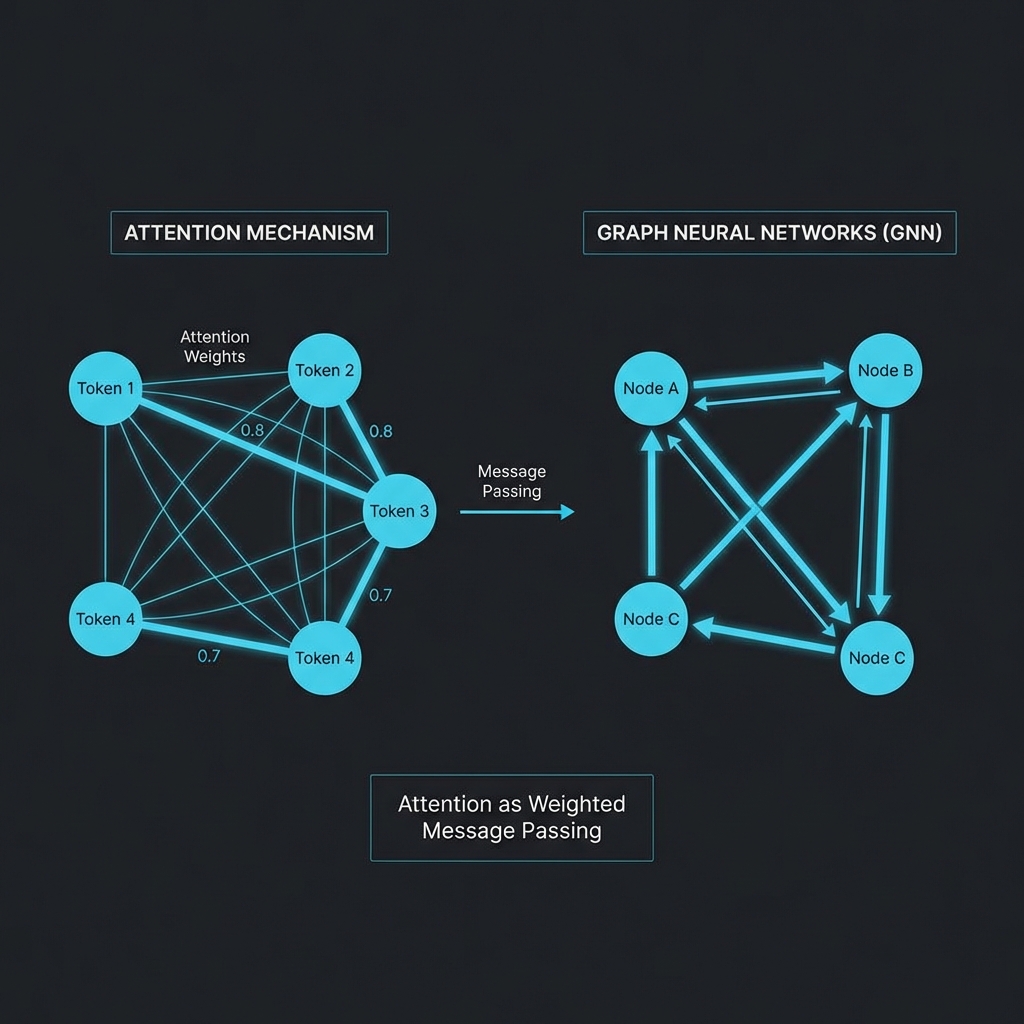

And while the attention mechanism itself hasn’t changed much since “Attention Is All You Need,” our understanding of it has.

There has been some very cool work connecting it to Hopfield networks and models of associative memory. Other work uses attention for graph neural networks.

The Graph Connection

In fact, this connection to graphs and message passing on graphs is arguably the best way to really understand attention. When you see attention as weighted message passing between nodes in a fully connected graph, where each token is a node and the attention weights are the edge weights, the mathematics becomes intuitive.
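One way to check that intuition is to write attention as an explicit loop over nodes and their neighbours and compare it with the usual matrix form. The sketch below is illustrative only: it assumes identity projections, a single head, and that every node has at least one neighbour (self-loops are included). The names `attention_as_message_passing` and `dense_self_attention` are hypothetical.

```python
import numpy as np

def attention_as_message_passing(X, adjacency):
    """Each node collects softmax-weighted messages from its neighbours."""
    n, d = X.shape
    out = np.zeros_like(X)
    for i in range(n):                                # receiving node
        neighbours = np.flatnonzero(adjacency[i])     # nodes that send to i
        scores = X[neighbours] @ X[i] / np.sqrt(d)    # one score per edge
        w = np.exp(scores - scores.max())
        w /= w.sum()                                  # normalise over incoming edges
        out[i] = w @ X[neighbours]                    # weighted sum of messages
    return out

def dense_self_attention(X):
    """The usual matrix form, for comparison."""
    scores = X @ X.T / np.sqrt(X.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    w /= w.sum(axis=-1, keepdims=True)
    return w @ X

rng = np.random.default_rng(0)
n = 5
X = rng.normal(size=(n, 4))  # 5 nodes (tokens) with 4 features each

# Fully connected graph: every token sends a message to every token.
full = np.ones((n, n), dtype=bool)
same_as_dense = np.allclose(
    attention_as_message_passing(X, full), dense_self_attention(X)
)

# Sparse graph: a ring with self-loops, so each node hears from 3 nodes only.
eye = np.eye(n, dtype=bool)
ring = eye | np.roll(eye, 1, axis=1) | np.roll(eye, -1, axis=1)
ring_out = attention_as_message_passing(X, ring)

print(same_as_dense, ring_out.shape)
```

On the fully connected graph the loop reproduces ordinary self-attention. Restricting the edges, as in the ring, gives the kind of neighbourhood-limited attention used in graph neural networks.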

Closing the Loop

AlexNet set off a huge wave of new research and a revolution in machine learning. “Attention Is All You Need” rode that wave.

But the steps along the way were smaller than they might seem. I should have known better—I’ve always been a strong believer in incremental development.

Attention didn’t evolve out of a vacuum. There is a clear lineage—one problem solved after another. Small steps to intelligence.

Guess that’s always how it goes with evolution, huh?

Thank you to @undebeha. This article is heavily inspired by the Twitter thread that he posted. Please give him a follow!